Nils Wedi, Head of Earth System Modelling at ECMWF

Having just attended the 2019 User Workshop at the Oak Ridge Leadership Computing Facility (OLCF), I wanted to discuss some of the advances in supercomputing that were presented there. How do we harness the massive parallelism and computing power for the specific application of numerical weather prediction (NWP) while learning from other disciplines?

Preparing for the hybrid computer architecture of the world’s fastest computer

Oak Ridge, the ‘Secret City’, is steeped in history through its role in the Manhattan Project. Today it houses various science activities and the fastest computer in the world (Top500 list, Nov 2018): Summit. Summit is equipped with about 4600 liquid-cooled nodes, each with 2 IBM Power9 (21 core) processors and 6 NVIDIA Tesla V100 (Volta) GPUs. It is the GPUs that allow it to deliver its immense number-crunching power at (only) approximately 10 MW.

My selfie in front of the Summit cooling system and the front rack (left), and a picture down one of the many aisles (right).

Summit has other exciting features, such as a powerful Mellanox adaptive (nearly congestion-free) routing network between nodes, additional direct GPU-to-GPU communication (NVLink), and approximately 1.6 TB of NVMe burst buffer memory on every one of the 4600 nodes.

Just next door is Titan, which was the fastest computer not so long ago (and is still in the Top10) and which pioneered in 2009 the then-emerging trend of combining powerful accelerators with more traditional CPUs.

In fact, it has just been announced that Titan will be decommissioned in June to make space for the first US exascale (>1.5 exaflops) computer (available in 2021): Frontier. The workshop included a brief introduction to Frontier, which will follow the same design concept as the previous machines, with a hybrid arrangement combining one AMD EPYC processor with 4 purpose-built Radeon Instinct GPUs. There was a clear message to users: if your application runs well on Summit, this should be the best preparation for running well on the Frontier system.

For ECMWF it is very important to prepare and be ready for these hybrid architectures, including efficient use of GPU accelerators. Another clear message was that model developers need to keep rethinking how to adapt to heterogeneous architectures (“whatever it takes”). This typically requires effective teamwork rather than the work of individuals, as was perhaps customary 10 years ago.

Optimising the ECMWF Integrated Forecasting System (IFS) on the Summit computer

Even though ECMWF is unlikely to be able to afford the $600 million price tag (the largest ever public procurement for a supercomputer), or the energy bill for the estimated 29 MW needed to run a computer like Summit, we have been successful with the prestigious INCITE19 award “Unprecedented scales with ECMWF’s medium-range weather prediction model”, which allows us to run and optimise our IFS model on Summit. In fact, our INCITE contribution is one of only very few Earth sciences applications (9% of the INCITE allocation). This may indicate that many Earth system model (ESM) codes are simply not ready for these machines, or for the undeniable effort required to make weather and climate models run well on them.

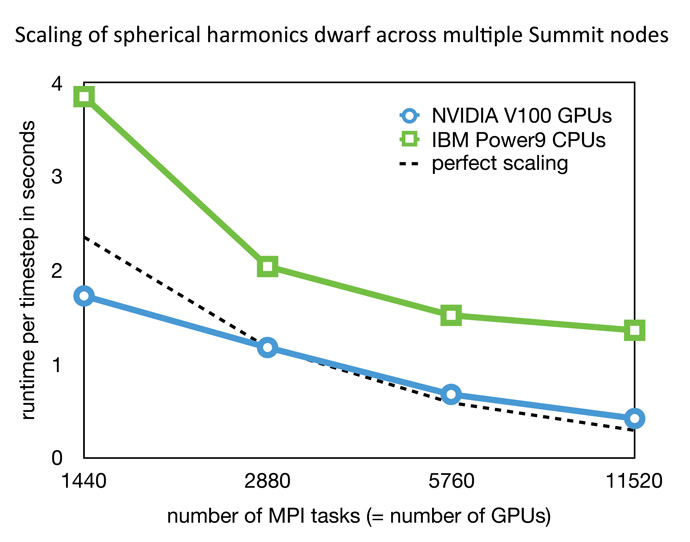

In our project we are running the 1 km global Integrated Forecasting System (IFS) model (both the IFS-spectral and the IFS-finite volume models), with selected parts on the GPU, such as the spectral transforms, some of the physics or the advection. Preliminary results using only the CPUs already show a time-to-solution speed of up to 112 forecast days per day at 1.45 km resolution across 3840 nodes on Summit (see also Peter Bauer’s recent blog), and the spectral transform dwarf has been run for the first time across 11520 GPUs.

Now we are optimising the IFS further to make good use of the powerful GPUs.

Scaling of spherical harmonics dwarf across multiple Summit nodes, using a hybrid OpenMP/OpenACC/MPI configuration, using GPUdirect and CudaDGEMM/FFT libraries. 11520 MPI tasks in this figure use 1920 nodes.
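The figures quoted above can be cross-checked with a few lines of arithmetic. The sketch below is only illustrative: it assumes one MPI task per GPU (the caption does not say so explicitly), takes the node count as the approximate 4600 mentioned earlier, and uses hypothetical helper names.

```python
# Back-of-the-envelope checks on numbers quoted in the text.
GPUS_PER_NODE = 6      # each Summit node carries 6 GPUs
TOTAL_NODES = 4600     # approximate size of Summit


def tasks_per_node(mpi_tasks: int, nodes: int) -> float:
    """Average number of MPI tasks placed on each node."""
    return mpi_tasks / nodes


def machine_fraction(nodes: int, total_nodes: int = TOTAL_NODES) -> float:
    """Share of the (approximate) machine used by a run."""
    return nodes / total_nodes


def wall_clock_hours(forecast_days: float, forecast_days_per_day: float) -> float:
    """Wall-clock hours needed for a forecast at a given throughput."""
    return forecast_days * 24.0 / forecast_days_per_day


# 11520 MPI tasks on 1920 nodes: consistent with one task per GPU (assumption).
dwarf_tasks_per_node = tasks_per_node(11520, 1920)

# The CPU-only 1.45 km run used 3840 nodes.
cpu_run_fraction = machine_fraction(3840)

# At 112 forecast days per day, a 10-day forecast takes a little over 2 hours.
ten_day_hours = wall_clock_hours(10, 112)

print(dwarf_tasks_per_node, round(cpu_run_fraction, 3), round(ten_day_hours, 2))
```

Under these assumptions, the scaling run in the figure fills every GPU on its 1920 nodes, while the CPU-only run occupies most of the machine.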

Other weather and climate projects using the Summit computer

Other work within the INCITE programme is using a 25 km climate model (running on the CPUs), combined with many “super-parametrised” cloud models running at every grid point and realised computationally on individual GPUs.

The other effort in the climate domain, “Exascale deep learning for climate analytics”, used established machine learning frameworks such as TensorFlow (from Google) and Horovod (from Uber), together with some “black art” to achieve convergence of the training network, for massive-scale image classification, localisation, object detection and segmentation of large climate datasets (see presentations at the User Workshop). While it clearly was as good as or better than human heuristics, it required 14.4 TF to process one image (i.e. per post-processing step at 25 km horizontal resolution)!

What can we learn from other disciplines?

For example, the computational challenges of imaging the Earth’s interior down to about 250 km, using data assimilation of earthquake activity via massive adjoint tomography calculations, are not dissimilar to the challenges and solutions in data assimilation for weather.

While not diminishing the efforts spent in achieving some of the exciting results shown, other applications may be “luckier”, in that the problem being studied is a perfect fit for Summit. One example is the “needle in a haystack” problem of identifying the susceptibility of individual humans to disease, with a combinatorial explosion of possible (and a priori unknown) interactions. Cleverly, Joubert and co-authors realised the structural equivalence of similarity methods to the powerful matrix-matrix multiply (GEMM), with its enormous floating point rate potential, which IFS spectral transforms also benefit from.
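To illustrate why an all-pairs similarity calculation maps so naturally onto GEMM, here is a minimal NumPy sketch. It is not the method of Joubert and co-authors: the similarity measure (a simple count of shared features between binary vectors), the function names and the random test data are all assumptions chosen for brevity.

```python
import numpy as np


def cooccurrence_loop(x: np.ndarray) -> np.ndarray:
    """All-pairs count of shared features, written as explicit loops."""
    n = x.shape[0]
    out = np.zeros((n, n), dtype=np.int64)
    for i in range(n):
        for j in range(n):
            # Number of positions where both samples have the feature set.
            out[i, j] = np.sum(x[i] & x[j])
    return out


def cooccurrence_gemm(x: np.ndarray) -> np.ndarray:
    """The same counts expressed as one matrix-matrix multiply."""
    xi = x.astype(np.int64)
    return xi @ xi.T


# Hypothetical data: 5 samples, each a binary vector of 40 features.
rng = np.random.default_rng(0)
samples = rng.integers(0, 2, size=(5, 40), dtype=np.uint8)

loop_result = cooccurrence_loop(samples)
gemm_result = cooccurrence_gemm(samples)
print(np.array_equal(loop_result, gemm_result))
```

The double loop and the single product compute identical counts, but only the second form hands the whole problem to a tuned GEMM routine, which is where accelerators deliver most of their floating point rate.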

Other applications using massive-scale tensor algebra (and thus GEMM users) include quantum theory, material sciences in the development of photovoltaic devices, and giant inverse problems in cosmology.

Incidentally, there was also an exciting talk from the authors of the BLAS (and LAPACK or MAGMA) libraries on accelerating GEMM with mixed (including half) precision, achieving a factor of 4 speed-up while retaining double precision accuracy in the final result. These techniques could be readily used in IFS. It was also good to note that future supercomputers will continue to accelerate and support key IFS technologies such as matrix multiplies, FFTs (still the workhorse in cosmology and turbulence research), and dense linear algebra operations. Quantum chromodynamics (QCD) research requires a conjugate gradient solver, with most of the time spent in the stencil operation, which is also not so different from the key issue in more local solvers in NWP.
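One common way to combine low-precision speed with double precision accuracy is iterative refinement: solve cheaply in low precision, then correct the answer using residuals computed in double precision. The sketch below assumes this is the kind of technique meant; the talk’s actual algorithm is not described here. It uses single rather than half precision, a hypothetical function name, and a well-conditioned random test matrix.

```python
import numpy as np


def solve_mixed_precision(a, b, max_iters=10, tol=1e-12):
    """Solve a @ x = b with float32 solves and float64 residual corrections."""
    a32 = a.astype(np.float32)
    # Initial low-precision solve (the expensive, accelerator-friendly part).
    x = np.linalg.solve(a32, b.astype(np.float32)).astype(np.float64)
    for _ in range(max_iters):
        # Residual in double precision: this is what recovers the accuracy.
        r = b - a @ x
        if np.linalg.norm(r) <= tol * np.linalg.norm(b):
            break
        # Low-precision correction. A real implementation would reuse the
        # factorisation instead of solving from scratch each time.
        d = np.linalg.solve(a32, r.astype(np.float32))
        x += d.astype(np.float64)
    return x


# Hypothetical well-conditioned test problem (diagonally dominant matrix).
rng = np.random.default_rng(1)
n = 50
a = rng.standard_normal((n, n)) + n * np.eye(n)
b = rng.standard_normal(n)

x_ref = np.linalg.solve(a, b)  # full double precision reference
x_low = np.linalg.solve(a.astype(np.float32), b.astype(np.float32)).astype(np.float64)
x_mixed = solve_mixed_precision(a, b)

err_low = np.linalg.norm(x_low - x_ref) / np.linalg.norm(x_ref)
err_mixed = np.linalg.norm(x_mixed - x_ref) / np.linalg.norm(x_ref)
print(err_low, err_mixed)
```

The refinement only converges quickly when the matrix is reasonably well conditioned, so whether it pays off for a given IFS component would need to be tested case by case.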

Of much interest was also how to manage workflows on Summit (e.g. in ensemble applications with 107 ensemble members, EnTK), and how to enable abstractions of hardware for the development of middleware software. With this came an infinite array of new cybertools and user framework paradigms such as Jupyter notebooks, Kubernetes (the new standard for container orchestration?), Airflow, Spark, Swift, Hadoop, PanDA, EnTK, Radical, Apache BigDataStack, Globus (bulk file transfer), to name just a few that ECMWF needs to engage with in the future.

A peek into the future?

Do you dare to peek into the future beyond the exascale Frontier? Well, hybrid compute nodes are here to stay, but with accelerators that may be called QPU rather than GPU in future, programmed through an API that defines a compute kernel called teleport and moves it to the accelerator via xacc.teleport (qubits). Qubits are the essential ingredient of the logical units on a quantum computer chip (of which several already exist!), and such chips may be expected to solve more mainstream applications in about 5-10 years.

Finally, there was a presentation on neuromorphic, or human-brain-shaped, computing. Spiking neuromorphic systems work on stimulating impulses and event-driven learning networks. They are low precision, low power and massively parallel (and complicated to program), with short-term applications in robotics and autonomous vehicles, but potentially stochastically stimulated weather forecasting next?

Acknowledgements

Adaptation to future architectures is teamwork, and this particular research is supported by Peter Bauer, Sami Saarinen, Andreas Müller, Christian Kühnlein, Willem Deconinck, Peter Düben, Michael Lange, Wayne Gaudin, Patrick Gillies, Olivier Marsden, and Michael Sleigh.

This research used resources of the Oak Ridge Leadership Computing Facility, which is a DOE Office of Science User Facility supported under contract DE-AC05-00OR22725.

The ESiWACE, ESCAPE and ESCAPE-2 projects have received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement No 675191, 67162 and 800 987, respectively. See also http://www.hpc-escape.eu/ and http://www.hpc-escape2.eu/.

We also gratefully acknowledge access to Piz Daint facilitated by Thomas Schulthess and Giuffreda Maria Grazia.