Nils Wedi, Head of Earth System Modelling at ECMWF

A team led by ECMWF scientists Nils Wedi, Peter Bauer and Peter Düben, with collaborator Valentine Anantharaj from Oak Ridge National Laboratory, has just completed the world’s first seasonal timescale global simulation of the Earth’s atmosphere with 1 km average grid spacing. The simulation was run with an adapted version of the ECMWF Integrated Forecasting System (IFS) on Oak Ridge’s Summit computer – the fastest computer in the world (as of November 2019).

Although it is only a single realisation, the 1 km data provide a reference for evaluating the strengths and weaknesses of the operational numerical weather prediction (NWP) forecasts provided by ECMWF, which are currently run at 9 km grid spacing.

The realism of extreme weather in the seasonal 1 km data may also be compared to the representation of weather in climate projections, which today are obtained from simulations that are one to two orders of magnitude coarser. Despite the significant cost, global simulations at resolutions of about 1 km have recently been advocated in an article by Professor Tim Palmer (University of Oxford) and Professor Bjorn Stevens (Max Planck Institute for Meteorology, Hamburg) as a way forward “commensurate with the challenges posed by climate change”.

The data will support future satellite mission planning, as new satellite tools can be evaluated in a realistic global atmosphere simulated with unprecedented detail. The 1 km simulation may also be seen as a prototype contribution to a future ‘digital twin’ of our Earth.

Running on the world’s fastest computer

Summit at Oak Ridge is the fastest computer in the world (Top500 list, Nov 2019), and yet, literally next door, its successor Frontier is being built. Frontier is due to be ready in 2021 and will be 10 times bigger. Both Summit and Frontier are equipped on each compute node with several GPUs (6 NVIDIA Volta V100 GPUs on Summit vs 4 purpose-built AMD Radeon Instinct GPUs on Frontier) and multicore CPUs (2 IBM Power9 CPUs vs 1 AMD EPYC CPU). Incidentally, ECMWF is also currently installing its new supercomputer, provided by Atos and based on AMD EPYC processors.

The 4-month-long global atmospheric simulation at 1 km grid spacing was conducted as part of an INCITE20 award providing 500,000 node-hours on Summit. The award is one of 47 made by the US INCITE programme for 2020 after a highly competitive selection process. Our choice of IFS model configuration follows earlier sensitivity experiments described in detail in Dueben et al. (2020). Our first results show that the continuously improving hydrostatic NWP model configuration of the IFS performs well even at an average 1 km grid spacing. This seems to challenge a common belief in dynamical meteorology that non-hydrostatic equations would be required at this level of resolution. The impact of non-hydrostatic effects is not yet known, but our run provides a baseline against which future non-hydrostatic simulations can be measured.

The simulations have been conducted on only a fraction of Summit, at a speed of about one simulated week per day, using 960 Summit nodes with 5760 MPI tasks × 28 threads, which translates into 5.4 seconds per model time step. Thanks in part to the spectral compression of the global data, 4 months of TCo1279 (9 km) output data has a size of about 10 TB and 4 months of 3-hourly 1 km data is about 450 TB. Summit’s other exciting features came in handy for our simulations, such as approximately 1.6 TB of NVMe burst buffer memory on every one of the 4600 nodes, and a powerful Mellanox adaptive (nearly congestion free) routing network between nodes (also featuring in ECMWF’s future machine). The model output is handled by ECMWF’s operational FDB5 object store (Smart et al., 2019), making use of the dedicated NVMe memory. This way, the input/output (I/O) can be completely decoupled from the computation without the need for the IFS I/O server and associated additional nodes.
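As a rough consistency check on the figures quoted above, the following Python sketch works out the fraction of Summit used, the MPI layout per node and an approximate node-hour cost for the season. It is a back-of-envelope estimate only: the 122-day season length is our assumption, and it treats "about one simulated week per day" as exact, ignoring queueing, restarts and any other overhead.

```python
# Back-of-envelope check of the resource figures quoted in the text.
NODES_USED = 960              # Summit nodes used for the run
NODES_TOTAL = 4600            # Summit nodes in total
MPI_TASKS = 5760
THREADS_PER_TASK = 28
SECONDS_PER_STEP = 5.4        # wall-clock seconds per model time step
AWARD_NODE_HOURS = 500_000    # INCITE20 award
SIM_DAYS_PER_WALL_DAY = 7.0   # "about one simulated week per day"
SEASON_DAYS = 122             # ASSUMPTION: four months taken as ~122 days

fraction_of_summit = NODES_USED / NODES_TOTAL
tasks_per_node = MPI_TASKS / NODES_USED
threads_total = MPI_TASKS * THREADS_PER_TASK

# Upper bound on time steps per wall-clock day if nothing else took time.
max_steps_per_wall_day = 86_400 / SECONDS_PER_STEP

# Wall-clock days and node-hours needed for the whole season.
wall_days = SEASON_DAYS / SIM_DAYS_PER_WALL_DAY
node_hours = wall_days * 24 * NODES_USED

print(f"Fraction of Summit used:     {fraction_of_summit:.1%}")
print(f"MPI tasks per node:          {tasks_per_node:.0f}")
print(f"Threads in total:            {threads_total:,}")
print(f"Max steps per wall-clock day: {max_steps_per_wall_day:,.0f}")
print(f"Estimated wall-clock days:   {wall_days:.1f}")
print(f"Estimated node-hours:        {node_hours:,.0f}")
print(f"Share of the award:          {node_hours / AWARD_NODE_HOURS:.0%}")
```

Under these assumptions the run occupies roughly a fifth of Summit's nodes and costs on the order of 400,000 node-hours, which sits within the 500,000 node-hour award.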

We are now looking forward to analysing the staggering 33,784,857,600,000 samples of temperature, pressure, humidity, winds, and clouds as illustrated in the simulated satellite images. Compare this with the mere 3,500,000,000 smartphone users in the entire world!
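To put the two numbers side by side, a minimal Python sketch of the comparison follows; it uses only the two figures quoted above, and the variable names are ours.

```python
# Ratio of simulated data samples to smartphone users, using the figures above.
SAMPLES = 33_784_857_600_000
SMARTPHONE_USERS = 3_500_000_000

samples_per_user = SAMPLES / SMARTPHONE_USERS
print(f"{samples_per_user:,.0f} samples per smartphone user")
```

That is nearly ten thousand samples for every smartphone user.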

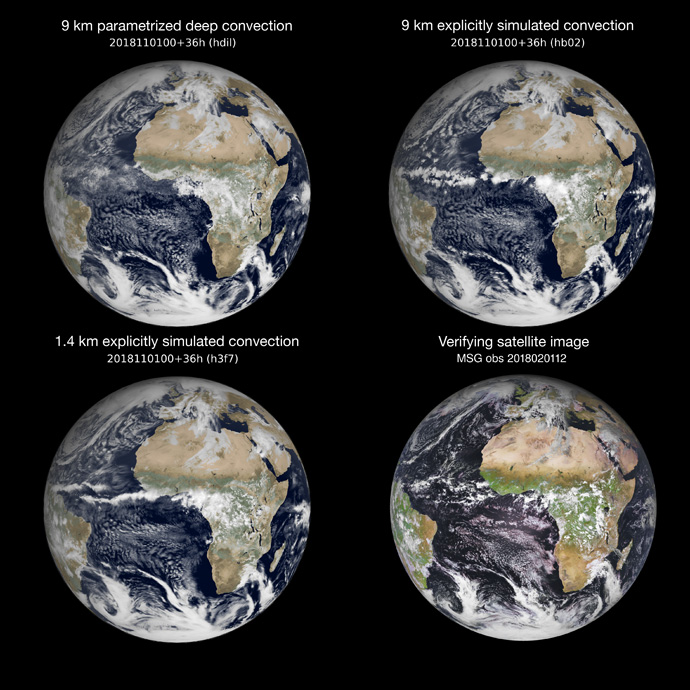

Simulated visible satellite images of the 9 km simulation with parametrized deep convection (top left), the 9 km (top right) and 1 km (bottom left) simulations with explicitly simulated convection, and the verifying visible Meteosat Second Generation satellite image (bottom right) at the same verifying time. The simulated images are based on 3-hourly accumulated shortwave radiation fluxes, which leads to a lack of sharpness (in the image only) compared to the instantaneous satellite picture.

The work continues a history of using ECMWF model data for observing system simulation experiments (OSSEs), which support future satellite mission planning by using ‘nature runs’ to simulate satellite observations that do not exist yet, e.g. the most recent ECO1280.

First results

While building on 40-plus years of NWP experience encapsulated in ECMWF’s IFS, the simulation was nevertheless a step into unknown territory. Would the model be stable over a season? Would there be issues with explicitly resolved convection or the much steeper slopes of topography now showing up? With 9 km grid spacing, the Himalaya mountains reach heights of about 6,000 m in the simulated world. However, with the newly resolved details of topography at 1 km grid spacing, we can now proudly present our ‘Summit certificate’ (pun intended), having completed a climb above 8,000 m with IFS: 8,172 m to be precise, not quite reality but pretty close!

Despite our concerns, all went well. The simulation of the Earth's atmosphere at 1 km grid spacing produced a realistic global mean circulation and, intriguingly, an improved stratosphere through the resolved feedback of deep convection and topography, and the associated resolved Rossby and inertia-gravity waves emanating into the stratosphere. The data also give us direct indications of weather extremes, such as the likelihood of tornadoes, for the first time. The unprecedented 4-month reference dataset will directly support our model development efforts and help to estimate the impact of future observing systems.

Reassuringly, we find that the global energy redistribution is similar in simulations with 1 km grid spacing (with explicit deep convection) and in the simulations with 9 km grid spacing (with parametrized deep convection). Notably, we do not readily find this similarity if we switch off the deep convection parametrization at coarser resolutions.

In summary, there is so much more to explore in this new world of ‘storm-scale’ resolving global modelling, and this reference simulation allowed only a glimpse into an exciting modelling future for our Earth’s weather and climate. To get there, we need more research to enhance model development and the coupling to ocean, waves and atmospheric chemistry, and we need to continue our efforts to adapt to novel and energy-efficient hardware, while readily embracing emerging technologies.

Further reading

Wedi, N. P., Dueben, P., Anantharaj, V. G., Bauer, P., Boussetta, S., Browne, P., Deconinck, W., Gaudin, W., Hadade, I., Hatfield, S., Iffrig, O., Lopez, P., Maciel, P., Mueller, A., Polichtchouk, I., Saarinen, S., Quintino, T., & Vitart, F. (2020). A baseline for global weather and climate simulations at 1 km resolution. Submitted to JAMES.

Dueben, P., Wedi, N., Saarinen, S., & Zeman, C. (2020). Global simulations of the atmosphere at 1.45 km grid-spacing with the integrated forecasting system. Journal of the Meteorological Society of Japan. Ser. II.

Smart, S. D., Quintino, T., & Raoult, B. (2019). A high-performance distributed object-store for exascale numerical weather prediction and climate. In Proceedings of the platform for advanced scientific computing conference. New York, NY, USA: Association for Computing Machinery. doi: 10.1145/3324989.3325726

Acknowledgements

This research used resources of the Oak Ridge Leadership Computing Facility (OLCF), which is a DOE Office of Science User Facility supported under Contract DE-AC05-00OR22725. Access to the simulation data can be requested by contacting the OLCF Help Desk via email to help@nccs.gov and referring to project CLI900. This work benefited from the close collaboration between high-resolution simulation model benchmarking and advanced methodologies presently being developed for heterogeneous high-performance computing platforms at ECMWF in the ESCAPE-2 (No.800897), MAESTRO (No.801101), EuroEXA (No.754337) and ESiWACE-2 (No.823988) projects funded by the European Union’s Horizon 2020 future and emerging technologies and the research and innovation programmes.

Banner credit: Carlos Jones/ORNL displayed under Creative Commons Attribution 2.0 Generic (CC BY 2.0).