Ioan Hadade specialises in high-performance computing. His skills are essential for making our numerical weather forecasts run efficiently on our high-performance computing facility in Bologna, Italy.

Ioan’s interest in computer science goes back to his high school years in Romania. The Internet was very expensive where he lived. “I became my own small Internet provider by linking up all of my neighbours in a network, which meant that we were sharing the monthly bill, and I was also getting some pocket money in the process,” he says.

“I was always interested to know how things work, and once you start digging deeper, you naturally go from one thing to the next.”

He went on to study computer science at the University of Lincoln in the UK. During that time, his interest in supercomputers was kindled. A master’s course in high-performance computing (HPC) at the University of Edinburgh was followed by a PhD at Imperial College in London.

Ioan at a cluster building challenge event at the University of Edinburgh. (Credit: LiechsWonder Photography)

Ioan was briefly a postdoctoral researcher at the University of Oxford and the University of Surrey, where he worked on optimising the speed of computational fluid dynamics codes used by Rolls-Royce for aero engine design.

In 2019, he took up a role as a specialist in HPC at ECMWF. “I knew about ECMWF from my master’s course, where it was mentioned as a premier supercomputing centre, solving interesting problems on very large machines.”

Cost of communication

Ioan’s first task was to minimise the time spent on communication in the computations for an ECMWF weather forecast. “Communication is usually the biggest bottleneck in HPC,” he says. “That is because there are physical limits to how fast information can travel, and moving data around a supercomputer is orders of magnitude more expensive than performing the actual computation.”

The algorithms in ECMWF’s Integrated Forecasting System (IFS) are particularly communication-intensive. “You can break up the problem optimally, for example by doing useful computations on local data while you send and wait for remote data,” he says.

This was a long-standing problem. A first solution, developed in collaboration with Samuel Hatfield and Olivier Marsden from ECMWF and Richard Graham and Dmitry Pekurovsky from NVIDIA, is going to be implemented later this year.
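
The overlap Ioan describes, doing useful work on local data while remote data is still in flight, can be illustrated with a toy sketch. This is not how the IFS does it: the function names, the simulated delay and the use of a background thread in place of a real network transfer are all assumptions made for illustration.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fetch_remote(delay_s):
    """Hypothetical stand-in for receiving data from another node."""
    time.sleep(delay_s)  # simulated communication latency
    return [1.0, 2.0, 3.0]


def compute_local(data):
    """Hypothetical stand-in for work that needs only local data."""
    return sum(x * x for x in data)


def step_blocking(local, delay_s):
    """Wait for the remote data first, then compute: nothing overlaps."""
    remote = fetch_remote(delay_s)
    local_result = compute_local(local)
    return local_result + sum(remote)


def step_overlapped(local, delay_s):
    """Start the transfer, compute while it is in flight, then collect it."""
    with ThreadPoolExecutor(max_workers=1) as pool:
        pending = pool.submit(fetch_remote, delay_s)  # transfer starts now
        local_result = compute_local(local)           # overlaps with the wait
        remote = pending.result()                     # block only for the remainder
    return local_result + sum(remote)


if __name__ == "__main__":
    local_data = [0.5] * 4
    print(step_blocking(local_data, 0.05))
    print(step_overlapped(local_data, 0.05))
```

Both functions return the same value. The difference is that in the overlapped version the local computation runs during the waiting time rather than after it, so the step takes roughly the longer of the two phases instead of their sum.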

Upgrades of the Integrated Forecasting System

Since 2021, Ioan has been leading the High-Performance Computing Applications team. “The main responsibility of the team is to ensure that all HPC applications run as efficiently as possible on our HPC systems and, in the context of the EU’s Destination Earth initiative, on EuroHPC systems as well,” he says.

A particular focus is the implementation of upgrades of the IFS on ECMWF’s HPC systems.

“We work with other ECMWF teams when they implement a new operational cycle of the IFS,” Ioan explains. This cycle is initially called the e-suite, short for ‘experimental suite’. “We help them optimise the e-suite to make sure we hit the time-critical window.”

Ioan also helps to troubleshoot any performance issues there may be in operations. “For IFS Cycle 48r1, which was introduced last year, the upgrade to 9 km from 18 km in the ensemble forecast brought with it quite a few challenges that we had to tackle early on,” he explains.

“Some of the tasks in the suite were taking much longer than expected, and together with other teams we had to optimise each one of them to make sure that we adhere to the strict dissemination schedule.”

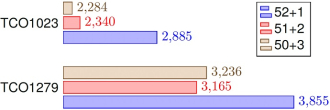

One of Ioan’s tasks before the implementation of IFS Cycle 48r1 was to establish whether it would be possible to go from a horizontal resolution of 18 km (TCo639) to 9 km (TCo1279), rather than the originally planned 11 km (TCo1023). The image shows how the runtime (lower is better) of a 15-day ensemble forecast at 11 km and 9 km resolution varies with the number of nodes used for I/O operations (where 50+1 means 50 nodes used for the model and 1 for I/O). As a result of these early benchmarking activities, the 9 km resolution was implemented for Cycle 48r1.

External project work

Ioan’s team has recently grown considerably, partly because it supports several external projects. These include EU-funded projects, such as EUPEX and NextGEMS. Ioan and his team are also heavily involved in the EU’s Destination Earth initiative, which is partly implemented by ECMWF.

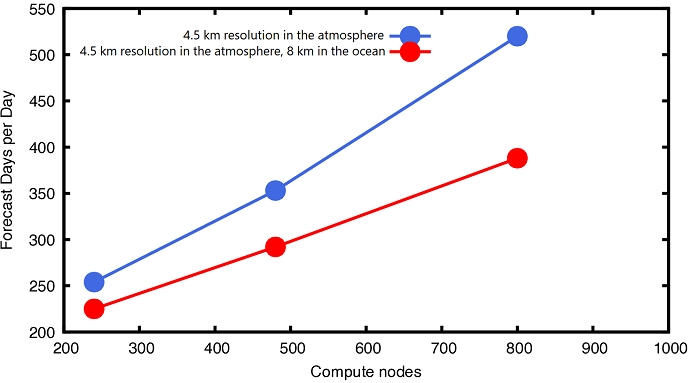

In Destination Earth, ECMWF is operating forecasting systems, including a high-resolution version of the IFS, on several EuroHPC supercomputers. “This involved porting, optimising, troubleshooting and benchmarking the Climate and Extremes Digital Twins on systems that we do not manage,” Ioan says. “This made progress at times particularly challenging, but in the end we were able to deliver what we promised.”

Early results of porting and benchmarking the IFS+FESOM Climate Digital Twin on the MareNostrum 5 supercomputer at the Barcelona Supercomputing Center (BSC).

New technology

Ioan also helps in the procurement of a new HPC system every few years by developing the benchmarks used to assess the computational performance and resiliency of different systems. These benchmarks are commonly known in the community as RAPS, which stands for Real Applications on Parallel Systems.

“Until now, we were in a fortunate position at ECMWF because the majority of our cycles on the HPC were spent running our IFS model,” he says. “This made our life easier from a benchmarking and procurement perspective as it allowed us to procure a machine that was well tuned to run the IFS as efficiently as possible.”

“This is very likely to change in the future with more and more cycles being used to run machine learning workloads. These require different kinds of hardware architecture, such as graphics processing units (GPUs), for best performance,” Ioan explains.

ECMWF’s forecasting system based on machine learning is known as the Artificial Intelligence Forecasting System (AIFS). New computing resources for the AIFS, based on GPUs rather than CPUs (central processing units), are to be procured.

“We created a first AIFS benchmark that we used this year in a Request for Information (RFI),” Ioan says. “We asked vendors for details on their high-performance computing roadmap for the next procurement cycle, and we are also going to create a new set of benchmarks for the Request for Procurement (RFP), in collaboration with the team developing the AIFS.”

“The goal is to get what we need to satisfy our scientific ambitions, at the best possible price, and at the levels of reliability and resiliency that are required for an operational weather centre such as ECMWF.”