David Richardson

ECMWF

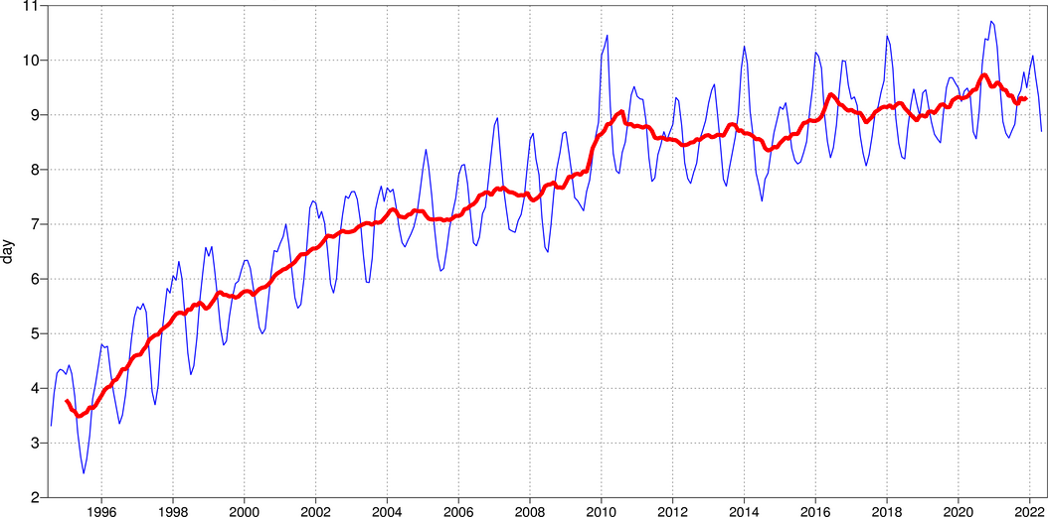

A range of measures is used to assess the quality of ensemble forecasts (https://www.ecmwf.int/en/forecasts/quality-our-forecasts). Figure 1 shows how the performance of the ECMWF ensemble forecasts (ENS) has progressed over the years. The plot shows the forecast day at which skill falls to a given threshold (25%) in a standard measure of probabilistic skill, the continuous ranked probability skill score (CRPSS), for 850 hPa temperature over the northern hemisphere. It shows that today we can make forecasts 9–10 days ahead that are of the same quality as forecasts only 4–5 days ahead in the late 1990s.
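
For readers new to the score, the sketch below shows one common way to compute the CRPS of a finite ensemble and turn it into a skill score. It is a minimal illustration, not ECMWF’s operational code: it assumes the ensemble is treated as an empirical distribution (operational variants, such as a “fair” CRPS, differ slightly) and that the reference forecast is something like climatology. The helper names `crps_ensemble` and `crpss` are hypothetical.

```python
from statistics import mean
from typing import Sequence


def crps_ensemble(members: Sequence[float], obs: float) -> float:
    """CRPS of an ensemble treated as an empirical distribution (lower is better).

    Uses the identity CRPS = E|X - y| - 0.5 * E|X - X'|, where X and X' are
    members drawn independently from the ensemble and y is the observation.
    """
    m = len(members)
    mean_abs_error = sum(abs(x - obs) for x in members) / m
    mean_abs_diff = sum(abs(xi - xj) for xi in members for xj in members) / (m * m)
    return mean_abs_error - 0.5 * mean_abs_diff


def crpss(crps_forecast: float, crps_reference: float) -> float:
    """Skill score: 1 = perfect, 0 = no better than the reference, < 0 = worse."""
    return 1.0 - crps_forecast / crps_reference


# Hypothetical (members, observation) pairs; scores are averaged over cases first.
cases = [([1.0, 2.0, 3.0], 2.0), ([0.5, 1.5], 2.5)]
mean_crps = mean(crps_ensemble(m, y) for m, y in cases)
```

On this reading, a CRPSS of 0.25 (the threshold used in Figure 1) means the forecast’s mean CRPS is 75% of the reference forecast’s mean CRPS.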

Nowadays, the CRPSS is a standard measure of ensemble forecast skill; it is used in several of ECMWF’s headline scores as well as in the World Meteorological Organization exchange of scores between global centres. However, in the early days of ensemble forecasting such scores were new to many users and difficult to understand. Ensemble forecasts also posed a practical challenge: how to make real-world decisions when presented with a range of possible outcomes. Working with Tim Palmer in the late 1990s, we explored the use of a simple cost-loss model of economic decision-making as an introduction to evaluating forecasts from the user’s point of view. This model shows how probabilistic ensemble forecasts can be used to make yes/no decisions, and it provides an intuitive and practical interpretation of the CRPSS.

Consider a user whose activity is weather-sensitive: if a particular adverse weather event occurs, the user will suffer a loss L, unless they have taken protective action in advance at a cost C. With access to a single (deterministic) forecast, such as ECMWF’s high-resolution forecast (HRES), the user can decide to take protective action whenever the adverse weather is forecast. However, this ignores a key element of decision-making: the assessment of risk. As well as the impact (here represented by the loss L), a proper risk assessment also needs the probability of the event happening, and this is the missing information that the ENS provides. Different users have different sensitivities to false alarms and missed events. If the event has probability p, protecting always costs C, while not protecting carries an expected loss of pL. It follows that, provided the forecast probabilities are reliable, a user gains maximum benefit from a probabilistic forecast by taking protective action whenever the probability of the event is greater than their cost-loss ratio C/L.
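
The decision rule can be written down in a few lines. This is a minimal sketch of the cost-loss reasoning above; the function names and the example costs (C = 20, L = 100, so C/L = 0.2) are hypothetical and chosen only for illustration.

```python
def expected_expense(p: float, cost: float, loss: float, protect: bool) -> float:
    """Expected expense on one occasion when the event has probability p."""
    return cost if protect else p * loss


def should_protect(p: float, cost: float, loss: float) -> bool:
    """Cost-loss decision rule: protect when p exceeds C/L (equivalently p*L > C)."""
    return p > cost / loss


# Hypothetical user: protection costs 20, the loss would be 100, so C/L = 0.2.
C, L = 20.0, 100.0
for p in (0.1, 0.3):
    act = should_protect(p, C, L)
    print(f"p={p}: protect={act}, expected expense={expected_expense(p, C, L, act):.1f}")
```

At p = 0.1 the expected loss (10) is below the cost of protecting (20), so the user does nothing; at p = 0.3 the expected loss (30) exceeds the cost, so protecting is cheaper.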

We can summarise the benefit of the forecast by the savings the user makes by using it, compared with a baseline of having only climate information (with climate information alone, the best the user can do is either always or never take protective action, whichever is cheaper on average). We can normalise these savings to lie between 0 (no better than climate) and 1 (perfect knowledge of the future weather) and refer to this measure as the potential economic value (or ‘value’ for short). The value of the forecast depends on the quality of the forecasts (the numbers of false alarms and missed events) and also on the user’s cost-loss ratio C/L.
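
One standard way to write the value is V = (E_climate − E_forecast) / (E_climate − E_perfect), with expenses expressed per unit loss L. The sketch below assumes the forecast quality is summarised by a hit rate H and false alarm rate F for an event with climatological base rate s; these symbols and the function name are assumptions for illustration, not taken from the article.

```python
def potential_value(hit_rate: float, false_alarm_rate: float,
                    base_rate: float, cost_loss: float) -> float:
    """Potential economic value V for a user with cost-loss ratio a = C/L.

    All expenses are expressed per unit loss L.
    """
    a, s = cost_loss, base_rate
    h, f = hit_rate, false_alarm_rate
    if not (0.0 < a < 1.0 and 0.0 < s < 1.0):
        raise ValueError("cost_loss and base_rate must lie strictly between 0 and 1")
    e_climate = min(a, s)   # cheaper of always protecting (a) and never protecting (s)
    e_perfect = s * a       # protect only on occasions when the event occurs
    # hits and false alarms cost a; misses cost 1; correct rejections cost 0
    e_forecast = h * s * a + f * (1.0 - s) * a + (1.0 - h) * s
    return (e_climate - e_forecast) / (e_climate - e_perfect)


print(round(potential_value(0.8, 0.1, 0.2, 0.25), 3))  # prints 0.667
```

A perfect forecast (H = 1, F = 0) gives V = 1. Note that V can also fall below 0 for users for whom following the forecast is more expensive than acting on climate information alone.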

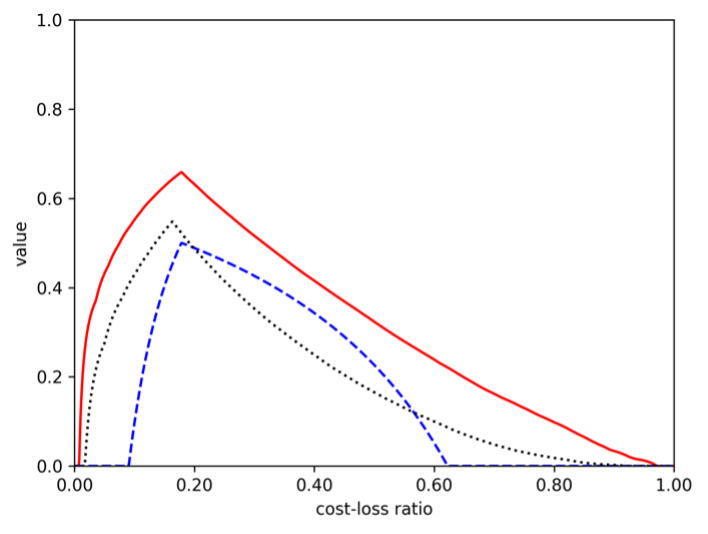

Figure 2 shows the value of the single control forecast and of the 50-member ensemble forecast (ENS) for predicting 850 hPa temperature anomalies greater than 1 standard deviation. The ensemble forecast (red line) brings additional benefit compared with the single deterministic forecast (blue line). Although the value of the forecasts varies between users, the ensemble, used appropriately, provides greater value to a wider range of users.
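
To illustrate why the ensemble helps a wider range of users, the sketch below compares a single yes/no forecast, which gives every user the same decisions, with ensemble probabilities, where each user protects when p > C/L. The ten cases and all numbers are made up purely for illustration; they are not ENS or control-forecast data. The helpers `value_from_sample` and `ensemble_decisions` are hypothetical.

```python
from typing import List, Sequence


def value_from_sample(protect: Sequence[bool], outcomes: Sequence[int],
                      cost_loss: float) -> float:
    """Potential economic value for one user, computed from a sample of cases.

    Expenses are per unit loss L: protecting costs C/L, an unprotected event costs 1.
    """
    a = cost_loss
    n = len(outcomes)
    s = sum(outcomes) / n                                           # observed base rate
    e_forecast = sum(a if d else o for d, o in zip(protect, outcomes)) / n
    e_climate = min(a, s)                                           # best of always/never protecting
    e_perfect = s * a                                               # protect only when the event occurs
    return (e_climate - e_forecast) / (e_climate - e_perfect)


def ensemble_decisions(probs: Sequence[float], cost_loss: float) -> List[bool]:
    """Each user protects when the ensemble probability exceeds their C/L."""
    return [p > cost_loss for p in probs]


# Made-up toy data (NOT ECMWF results): 10 cases, 1 = event occurred.
OUTCOMES = [1, 0, 0, 1, 0, 0, 0, 1, 0, 0]
SINGLE_FORECAST = [True, False, False, False, True, False, False, False, False, False]
ENS_PROBS = [0.9, 0.06, 0.0, 0.4, 0.3, 0.0, 0.08, 0.2, 0.0, 0.04]

for cl in (0.1, 0.5):
    v_single = value_from_sample(SINGLE_FORECAST, OUTCOMES, cl)
    v_ens = value_from_sample(ensemble_decisions(ENS_PROBS, cl), OUTCOMES, cl)
    print(f"C/L={cl}: single {v_single:+.2f}, ensemble {v_ens:+.2f}")
```

With these toy numbers the single forecast has negative value for the user with C/L = 0.1 and zero value for C/L = 0.5, while the ensemble, by letting each user choose their own probability threshold, has positive value for both.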

Several traditional skill scores can be interpreted in terms of the overall value to different groups of users. The average value over a set of users whose cost-loss ratios are evenly distributed between 0 and 1 is equivalent to a skill score called the Brier skill score (BSS). The BSS is a summary score for a single event (for example, more than 5 mm of precipitation in a day). If we then take a (weighted) average of the BSS over all possible thresholds, we arrive at the CRPSS. In other words, our headline CRPSS is a measure of the overall value of the ensemble forecasts for decision-making by a wide range of users in a variety of weather situations.
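
The link between value and the BSS can be checked numerically. The sketch below is a minimal illustration under stated assumptions: all users share the same loss L, their C/L values are spread evenly over (0, 1), and each user protects when the forecast probability exceeds their C/L. It adds up the expenses of all users and normalises the total savings between climate (0) and perfect knowledge (1); in that sense the result matches the BSS. Averaging each user’s normalised value separately gives a related but generally different number. The forecasts and outcomes are made-up toy values, and the function names are hypothetical.

```python
from typing import Sequence


def brier_score(probs: Sequence[float], outcomes: Sequence[int]) -> float:
    return sum((p - o) ** 2 for p, o in zip(probs, outcomes)) / len(outcomes)


def brier_skill_score(probs: Sequence[float], outcomes: Sequence[int]) -> float:
    s = sum(outcomes) / len(outcomes)  # sample climatology used as the reference
    return 1.0 - brier_score(probs, outcomes) / brier_score([s] * len(outcomes), outcomes)


def mean_expense(probs: Sequence[float], outcomes: Sequence[int], a: float) -> float:
    """Mean expense per unit loss for a user with C/L = a who protects when p > a."""
    return sum(a if p > a else o for p, o in zip(probs, outcomes)) / len(outcomes)


def aggregated_value(probs: Sequence[float], outcomes: Sequence[int],
                     n_users: int = 10_000) -> float:
    """Total savings of users with C/L spread evenly over (0, 1), normalised
    between climate (0) and perfect knowledge (1)."""
    s = sum(outcomes) / len(outcomes)
    users = [(k + 0.5) / n_users for k in range(n_users)]  # midpoint grid over (0, 1)
    e_forecast = sum(mean_expense(probs, outcomes, a) for a in users)
    e_climate = sum(min(a, s) for a in users)
    e_perfect = sum(s * a for a in users)
    return (e_climate - e_forecast) / (e_climate - e_perfect)


# Made-up probability forecasts and outcomes (1 = event occurred), for illustration only.
PROBS = [0.9, 0.7, 0.2, 0.1, 0.6, 0.0, 0.3, 0.05]
OUTCOMES = [1, 1, 0, 0, 0, 0, 1, 0]

print(f"BSS              = {brier_skill_score(PROBS, OUTCOMES):.4f}")
print(f"aggregated value = {aggregated_value(PROBS, OUTCOMES):.4f}")
```

The agreement is not a coincidence of the toy data: for one case with probability p and outcome o, the expense averaged over all C/L in (0, 1) is (p − o)²/2 + o/2, so summed expenses differ from the Brier score only by a constant that cancels in the normalisation.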

This decision-making framework continues to help us understand the relevance of different skill scores, as well as the potential benefits to users of different ensemble configurations. For example, the BSS is relatively insensitive to increases in ensemble size beyond 30–50 members. However, if user cost-loss ratios are concentrated at lower C/L (as might be expected for high-impact events with large potential losses), a substantially larger ensemble can bring significant gains in value, partly because such users need to act on small probabilities, which an ensemble of M members can only resolve in steps of 1/M. The recently introduced ‘diagonal score’ focuses on the discrimination ability of the ensemble and is relevant for high-impact weather (rare events and users with high potential loss, i.e. low C/L; Ben Bouallegue et al., 2018).
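
One side of the ensemble-size effect can be seen directly: with M members, forecast probabilities can only take the values k/M. The sketch below, using a hypothetical helper `min_members_to_act`, finds the smallest number of members that must forecast the event before a user with a given C/L acts. It illustrates only probability resolution; sampling noise in estimating small probabilities is a further reason larger ensembles help low-C/L users.

```python
from typing import Optional


def min_members_to_act(cost_loss: float, n_members: int) -> Optional[int]:
    """Smallest number of members k forecasting the event such that p = k/n > C/L.

    Returns None when no ensemble probability can exceed C/L (C/L >= 1).
    """
    for k in range(n_members + 1):
        if k / n_members > cost_loss:
            return k
    return None


for n in (50, 500):
    ks = [min_members_to_act(cl, n) for cl in (0.001, 0.005, 0.01)]
    print(f"{n} members: act when at least {ks} members forecast the event")
```

With 50 members, users with C/L of 0.001, 0.005 and 0.01 all end up with the same rule (act if any member forecasts the event), so the ensemble cannot tailor decisions to them; with 500 members their thresholds separate (1, 3 and 6 members).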

Thanks, Tim, for your drive and vision for the development and exploitation of ensemble forecasts. From making forecast value the focus of your RMetS Symons Memorial Lecture (2001, doi.org/10.1256/0035900021643593), through the more recent article we wrote for the ECMWF Newsletter (Decisions, Decisions; https://www.ecmwf.int/en/elibrary/80120-decisions-decisions), and continuing today, you have been hugely influential in promoting the practical benefits of ensemble forecasts and in establishing them as an indispensable component of real-world decision-making. Thank you for your constant support, encouragement and promotion of our work on the evaluation of ensemble forecasts.
